Overview

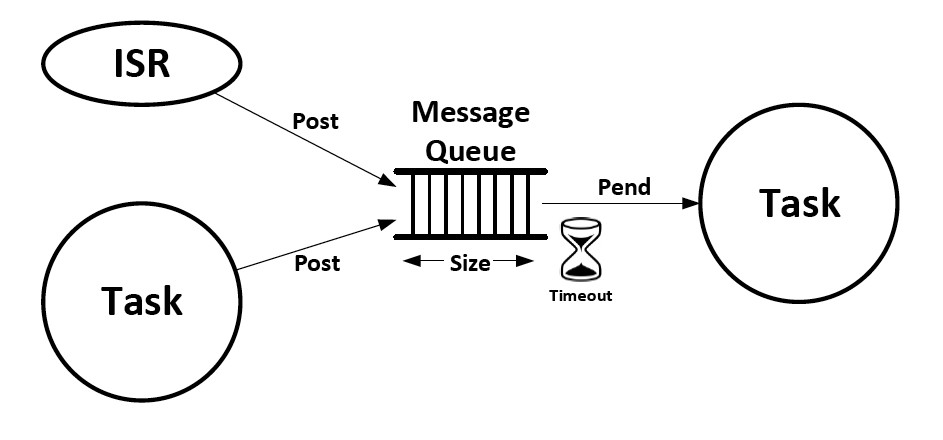

Queues are used as interprocess communication channels in computer systems. A typical arrangement is one-to-one: task A places a message into the queue and task B retrieves messages in a first-in, first-out (FIFO) manner.

Queues are usually sized to hold multiple data items rather than a single item, so they can act as a buffer and provide flexibility for the communication channel. Insert and remove operations can be performed asynchronously as long as the queue is not full. Queues are implemented in RAM. The information passed between processes may be the data itself or a pointer to the data; pointers are commonly used when RAM is constrained. Two common techniques for implementing queues are linked-list structures and circular buffers.

Linked List vs Circular Buffer

A useful property of linked lists is that their size is not fixed and can grow or shrink as needed. Very large queues can be constructed if there is available memory. However, in embedded systems this is often not an advantage: RAM is usually limited, and large FIFO queues that hold many messages can introduce significant latency between data ingress and egress, which may be unacceptable for many real-time applications.

For embedded use, the preferred queue (channel) structure is typically the circular buffer.

Circular Buffer Design

Circular buffers are usually designed to use a fixed amount of memory allocated at creation time. The buffer size is defined when it is created and remains fixed afterward (for example, 10 data slots). Addressing wraps around using modulo arithmetic, so the slot after the last is the first, similar to how a 12-hour clock wraps around.

Rather than moving data during read/write operations, the buffer uses pointers to identify the start and end positions (commonly referred to as the read and write pointers). Data items remain at fixed memory locations; only the pointers are updated on insert and remove operations. These pointers are also used to define queue full and queue empty conditions (for instance, when they are equal).

Blocking and Nonblocking Behavior

Under normal operation, the producer and consumer operate asynchronously, inserting and removing data as required. A task will block only in two cases: when the queue is full (producer blocks) or when the queue is empty (consumer blocks).

Note on Memory Pools vs Queues

An important distinction between a memory pool and a queue is that reading from a memory pool does not alter the content, while reading from a queue consumes the data (a destructive operation). Conceptually this is implemented by advancing the read pointer to the next position.

Queue Usage Summary

The following code snippets summarize typical queue operations in an RTOS environment.

/* Basic queue API */

/* 1. Create a queue */

FOS_QName queue = FOS_CreateQueue(QLength, QItemSize);

/* 2. Get a message from the queue */

FOS_GetFromQueue(queue, &buffer, waitingTime);

/* 3. Send a message to the queue */

FOS_SendToQueue(queue, &data, waitingTime);

Example: Global Queue Connecting Sender Task A and Receiver Task B

/* Use RTOS-provided types */

FOS_QName GlobalQA2B;

FOS_QLength QA2Blength = 1;

FOS_ItemSize QA2BItemSize = 4;

GlobalQA2B = FOS_CreateQueue(QA2Blength, QA2BItemSize);

Example: Send to Queue - Task A

/* Use RTOS-provided types */

long DataForQueueA2B;

const FOS_QwaitTime NoWaiting = 0;

FOS_QloadStatus QLoadState;

QLoadState = FOS_SendToQueue(GlobalQA2B, &DataForQueueA2B, NoWaiting);

Example: Get from Queue - Task B

/* Use RTOS-provided types */

long DataFromQueueA2B;

const FOS_QwaitTime NoWaiting = 0;

FOS_QreadStatus QreadState;

QreadState = FOS_GetFromQueue(GlobalQA2B, &DataFromQueueA2B, NoWaiting);

ALLPCB

ALLPCB