Introduction

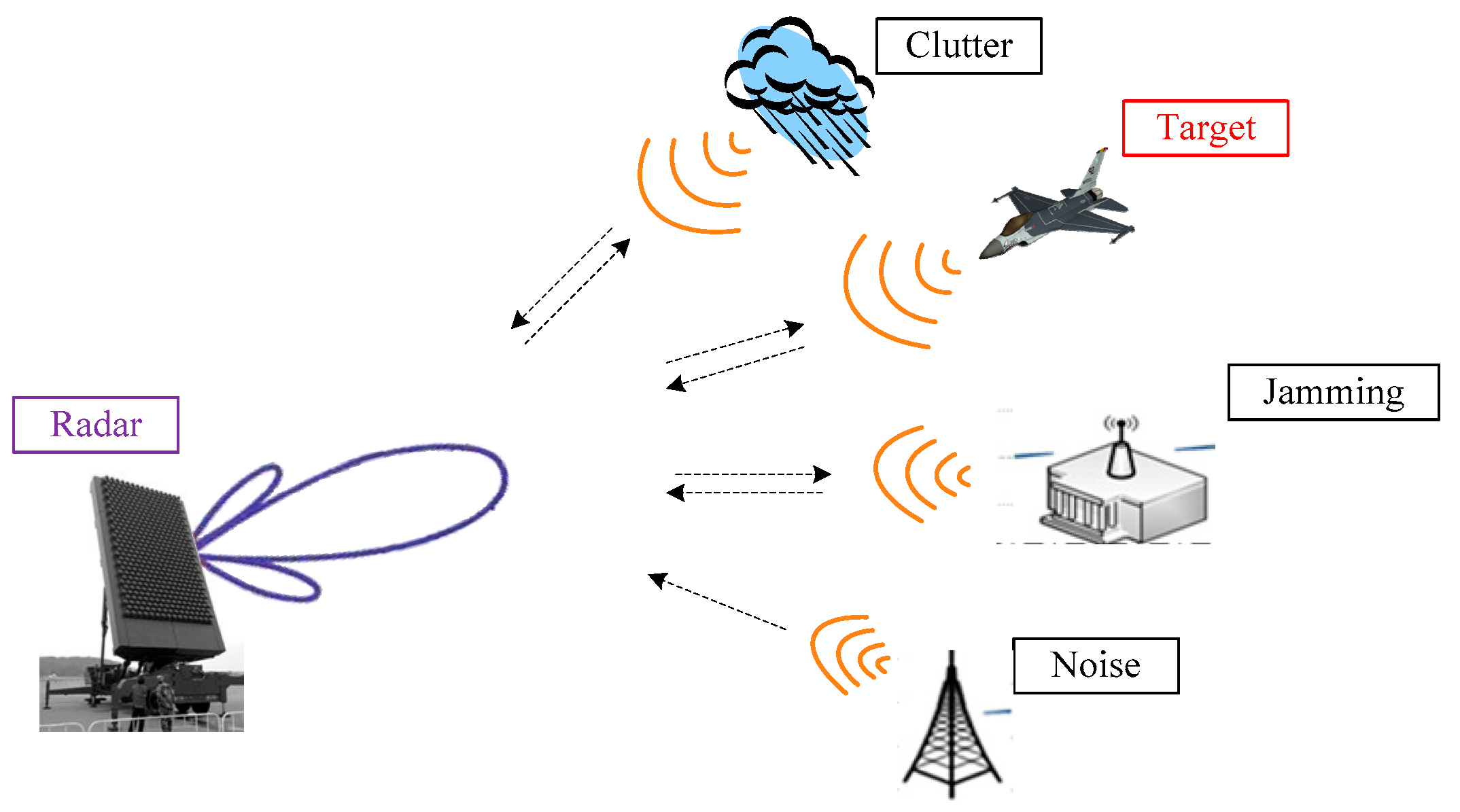

Deep learning has become one of the most prominent technologies in recent years, delivering strong performance in prediction, classification, and clustering across areas such as computer vision, natural language processing, and speech recognition. This article briefly examines the application of deep learning methods to radar target detection.

1. Limitations of Traditional Detection Methods

Traditional radar target detection methods typically model the statistical properties of radar echo signals and then make presence/absence decisions for targets under noise and clutter. Typical algorithms include likelihood ratio tests (LRT), track-before-detect (TBD), and constant false alarm rate (CFAR) techniques.
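
As a concrete instance of the model-based approach, here is a minimal sketch of cell-averaging CFAR (CA-CFAR), one common CFAR variant, applied to a 1-D range profile. It assumes square-law detected samples and homogeneous, exponentially distributed noise power. Under those assumptions the threshold multiplier follows in closed form from the target false alarm probability. The window sizes and `pfa` value are illustrative choices, not parameters taken from this article.

```python
def ca_cfar(power, num_train=8, num_guard=2, pfa=1e-3):
    """Cell-averaging CFAR over a 1-D profile of square-law (power) samples.

    Returns one boolean per cell: True where the cell under test exceeds the
    adaptive threshold. Edge cells without a full reference window return False.
    """
    n_ref = 2 * num_train  # reference cells: num_train on each side
    # Exponential noise power gives Pfa = (1 + alpha / N) ** -N,
    # so alpha = N * (Pfa ** (-1 / N) - 1).
    alpha = n_ref * (pfa ** (-1.0 / n_ref) - 1.0)
    half = num_train + num_guard
    detections = [False] * len(power)
    for i in range(half, len(power) - half):
        # Skip num_guard cells on each side of the cell under test.
        lagging = power[i - half : i - num_guard]
        leading = power[i + num_guard + 1 : i + half + 1]
        noise_est = (sum(lagging) + sum(leading)) / n_ref
        detections[i] = power[i] > alpha * noise_est
    return detections


if __name__ == "__main__":
    profile = [1.0] * 64  # flat noise floor
    profile[30] = 50.0    # one strong target return
    hits = ca_cfar(profile)
    print([i for i, d in enumerate(hits) if d])  # [30]
```

The closed-form threshold holds only when the noise and clutter actually follow the assumed distribution. In heterogeneous sea or land clutter, the achieved false alarm rate drifts away from `pfa`.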

In practical deployments, however, the statistical properties of radar echoes can be complex and variable, which makes it difficult to match them to a model. Detection models and algorithm parameters depend strongly on radar type, system characteristics, and the surrounding environment. Suitable parameter values are hard to determine for each operational scenario, so detection performance is often unsatisfactory when real conditions differ from those the model assumes.

2. Feasibility Analysis of Deep Learning Approaches

Image-based object detection is a major research area in computer vision. With rapid advances in deep learning, detection algorithms have shifted from hand-crafted feature methods to deep convolutional neural network approaches, improving accuracy and robustness. Representative models include R-CNN, Fast R-CNN, Faster R-CNN, and YOLO, which have seen practical use in civilian and defense contexts.

For radar target detection, radar echo images contain less information than optical images and differ in imaging mechanism, target characteristics, and resolution. However, as radar technology improves spatial and Doppler resolution, the quality and information content of echo images have increased. In principle, therefore, deep learning-based radar target detection is feasible and offers a new way to overcome the limitations of traditional algorithms.

3. Algorithm Overview

The general block diagram for deep learning-based target detection comprises data feature preprocessing, feature extraction, classification, and detection decision stages.

Data feature preprocessing converts frame-by-frame B-scan echo images into a feature space by applying statistical methods to produce quantified inputs for the algorithm. A deep neural network then extracts features; interference, clutter, noise, and targets tend to differ along certain feature dimensions, enabling a classifier to distinguish targets from interference and clutter. The detection decision module makes final judgments on candidate detections to produce tracks or detection points.
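
The article does not specify the preprocessing statistics. As a hypothetical illustration (`preprocess_bscan` is an invented name), the function below applies two common statistical transforms to one B-scan frame. It first performs log compression to reduce the dynamic range of the echoes, then applies per-frame z-score normalization so that every frame enters the network on the same scale.

```python
import math


def preprocess_bscan(frame, floor=1e-12):
    """Hypothetical preprocessing for one B-scan frame.

    `frame` is a list of rows of echo amplitudes. Returns log-power values
    normalized to zero mean and unit variance over the frame.
    """
    # Log compression (dB) shrinks the large dynamic range of radar echoes;
    # `floor` avoids log10(0) for empty cells.
    db = [[10.0 * math.log10(max(a * a, floor)) for a in row] for row in frame]
    values = [v for row in db for v in row]
    mean = sum(values) / len(values)
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    if std < 1e-12:  # flat frame: centre it but do not amplify rounding noise
        std = 1.0
    return [[(v - mean) / std for v in row] for row in db]


if __name__ == "__main__":
    frame = [[1.0, 2.0, 0.5], [4.0, 8.0, 1.5]]
    for row in preprocess_bscan(frame):
        print([round(v, 2) for v in row])
```

Per-frame normalization is only one option. Normalizing against long-term clutter statistics per range cell would preserve more information about how a frame differs from its background.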

Current object detection approaches are broadly categorized into two-stage detectors and single-stage detectors.

3.1 Two-Stage Detectors

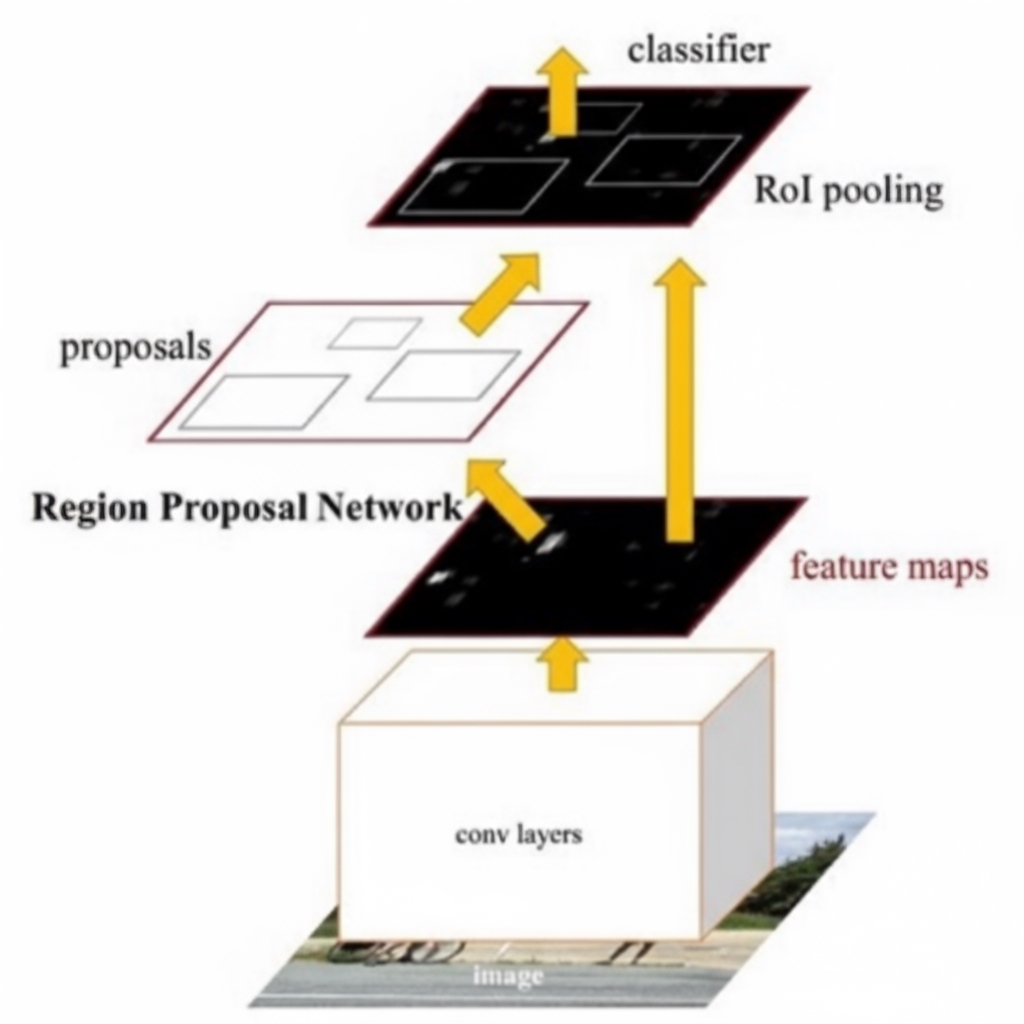

Two-stage detectors first generate candidate regions, then apply deep learning-based feature extraction and classification to those regions. A representative model is Faster R-CNN, though its detection speed may not meet real-time requirements in many cases. The Faster R-CNN architecture is illustrated below.

Faster R-CNN detection can be divided into four modules:

- (1) Conv layers: the backbone feature extraction network, a stack of conv + ReLU + pooling layers that produces feature maps shared by the RPN and the RoI Pooling layer.

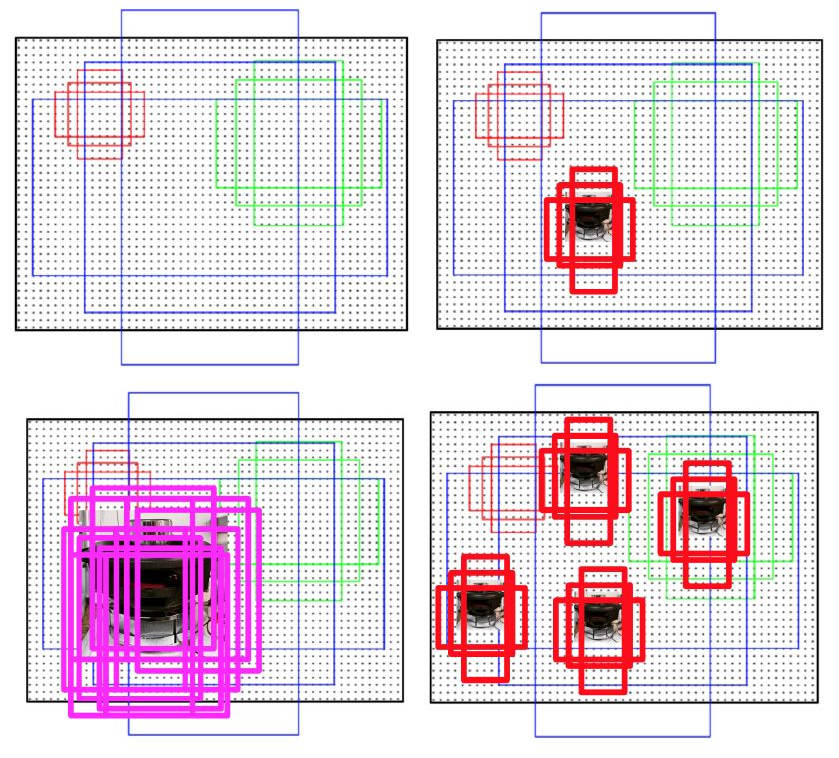

- (2) RPN (Region Proposal Network): replaces selective search for generating candidate boxes. It performs two tasks: classifying each predefined anchor as positive (foreground) or negative (background), and bounding box regression that refines the anchors into accurate proposals.

- (3) RoI Pooling: takes the proposals generated by the RPN, extracts the corresponding regions from the feature maps produced in (1), and pools each region into a fixed-size proposal feature map (for example 7×7) so that the downstream fully connected layers can perform classification and regression.

- (4) Classification and Regression: computes the final class labels from proposal feature maps and performs a final bounding box regression to produce precise detection boxes.
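
The anchor mechanism used in modules (2) and (4) can be sketched in plain Python. This sketch assumes the settings from the original Faster R-CNN paper: 9 anchors per location (3 scales with box sides of 128, 256, and 512 pixels × 3 aspect ratios), with anchors labeled positive when their IoU with a ground-truth box exceeds 0.7 and negative when it is below 0.3. It also uses the standard (tx, ty, tw, th) box parameterization. It omits the paper's extra rule that the highest-IoU anchor for each ground-truth box is also positive. The function names are illustrative.

```python
import math


def make_anchors(cx, cy, base=16, scales=(8, 16, 32), ratios=(0.5, 1.0, 2.0)):
    """Anchors (x1, y1, x2, y2) centred at (cx, cy): one per ratio/scale pair.

    base * scales gives side lengths 128, 256, 512 px; ratio = height / width.
    """
    anchors = []
    for r in ratios:
        for s in scales:
            area = float(base * s) ** 2
            w = math.sqrt(area / r)
            h = w * r
            anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors


def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def label_anchor(anchor, gt_boxes, pos_thr=0.7, neg_thr=0.3):
    """RPN training label: 1 = positive, 0 = negative, -1 = ignored."""
    best = max((iou(anchor, g) for g in gt_boxes), default=0.0)
    if best > pos_thr:
        return 1
    if best < neg_thr:
        return 0
    return -1


def decode(anchor, deltas):
    """Apply regression deltas (tx, ty, tw, th) to an anchor box."""
    wa, ha = anchor[2] - anchor[0], anchor[3] - anchor[1]
    xa, ya = anchor[0] + wa / 2, anchor[1] + ha / 2
    tx, ty, tw, th = deltas
    x, y = xa + tx * wa, ya + ty * ha            # shift the centre
    w, h = wa * math.exp(tw), ha * math.exp(th)  # rescale in log space
    return (x - w / 2, y - h / 2, x + w / 2, y + h / 2)


if __name__ == "__main__":
    anchors = make_anchors(300, 300)
    gt = [(240, 240, 370, 370)]  # hypothetical target box
    print([label_anchor(a, gt) for a in anchors])
    print(decode(anchors[3], (0.1, 0.0, 0.0, 0.0)))
```

The same `decode` parameterization is applied a second time in module (4), relative to the proposals instead of the anchors.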

3.2 Single-Stage Detectors

Single-stage detectors predict class labels and bounding boxes directly from the feature maps in a single forward pass, without a separate region proposal stage, which greatly increases detection speed. A representative model is YOLO (You Only Look Once).
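
In a single-stage detector, boxes are read directly off a dense grid of predictions. The sketch below decodes one raw prediction from a YOLOv5-style output layer. It assumes the parameterization used in the Ultralytics YOLOv5 code together with its default anchors. It omits class scores and batching, and the function names are illustrative.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


# Default YOLOv5 anchors (width, height in pixels) per output stride.
ANCHORS = {
    8: [(10, 13), (16, 30), (33, 23)],        # P3: small objects
    16: [(30, 61), (62, 45), (59, 119)],      # P4: medium objects
    32: [(116, 90), (156, 198), (373, 326)],  # P5: large objects
}


def decode_cell(raw, gx, gy, stride, anchor):
    """Decode raw outputs (tx, ty, tw, th, tobj) at grid cell (gx, gy).

    Centre offsets lie in (-0.5, 1.5) cells and width/height in (0, 4) times
    the anchor, following the YOLOv5 parameterization.
    """
    tx, ty, tw, th, tobj = raw
    cx = (sigmoid(tx) * 2 - 0.5 + gx) * stride
    cy = (sigmoid(ty) * 2 - 0.5 + gy) * stride
    w = (sigmoid(tw) * 2) ** 2 * anchor[0]
    h = (sigmoid(th) * 2) ** 2 * anchor[1]
    return (cx, cy, w, h), sigmoid(tobj)


if __name__ == "__main__":
    # Cell (10, 4) of the stride-8 layer, first anchor, hypothetical raw values.
    box, objectness = decode_cell((0.2, -0.1, 0.3, 0.0, 2.0), 10, 4, 8, ANCHORS[8][0])
    print(box, objectness)
```

The detector performs this computation for every cell and anchor at all three strides in one pass. This is why no separate proposal stage is needed.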

Using the YOLOv5 model as an example, its structure includes the following components:

(1) Backbone: YOLOv5 uses a CSPDarknet53-based backbone (CSP: Cross Stage Partial) to provide strong feature extraction at low computational cost.

(2) Neck: YOLOv5 uses an FPN + PAN (PANet-style) neck that fuses feature maps from different levels, passing semantic information top-down and localization information bottom-up.

(3) Head: the YOLOv5 head has three output layers, at strides 8, 16, and 32, responsible for detecting small, medium, and large objects respectively.

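(4) Output: loss function and postprocessing. YOLOv5 trains with a CIoU loss for bounding box regression and binary cross-entropy losses for objectness and classification; focal loss is available as an option for class imbalance but is disabled by default. At inference, non-maximum suppression (NMS) removes overlapping boxes to produce the final detections.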
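
The NMS step is simple enough to show in full. This greedy, single-class sketch keeps the highest-scoring box, discards any remaining box whose IoU with it exceeds a threshold, and repeats. The 0.45 threshold mirrors the default `--iou-thres` of YOLOv5's `detect.py`. The function itself is an illustration, not YOLOv5's implementation, which runs batched, class-aware NMS through `torchvision.ops.nms`.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_thr=0.45):
    """Greedy, class-agnostic NMS; returns indices of kept boxes, best first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Drop every remaining box that overlaps the kept one too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_thr]
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
    scores = [0.9, 0.8, 0.7]
    print(nms(boxes, scores))  # [0, 2]
```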

Overall, the YOLOv5 structure is relatively compact and combines techniques such as CSP blocks, FPN + PAN feature fusion, and SiLU activation (in recent releases) to improve performance and robustness.

4. Application Example

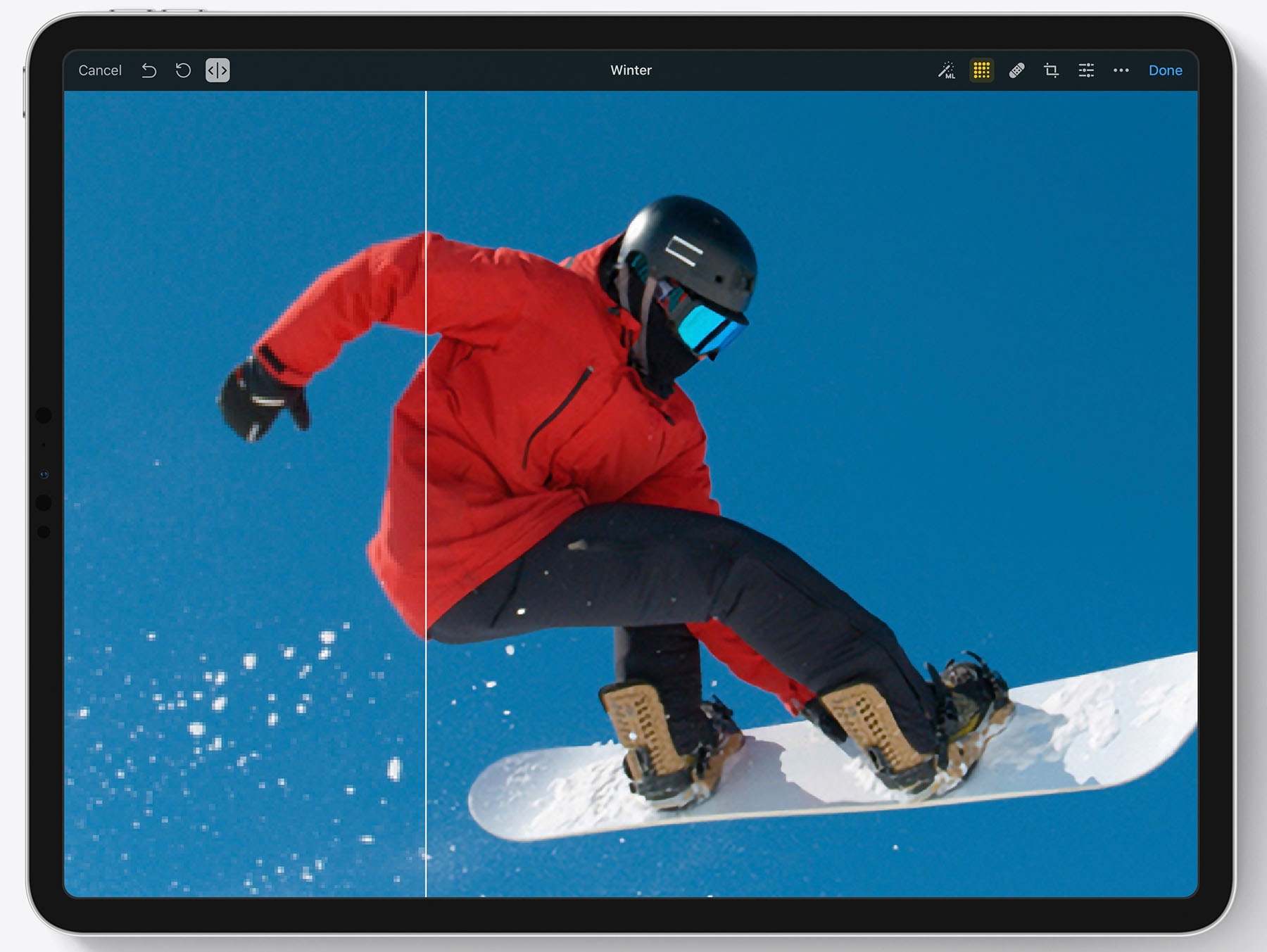

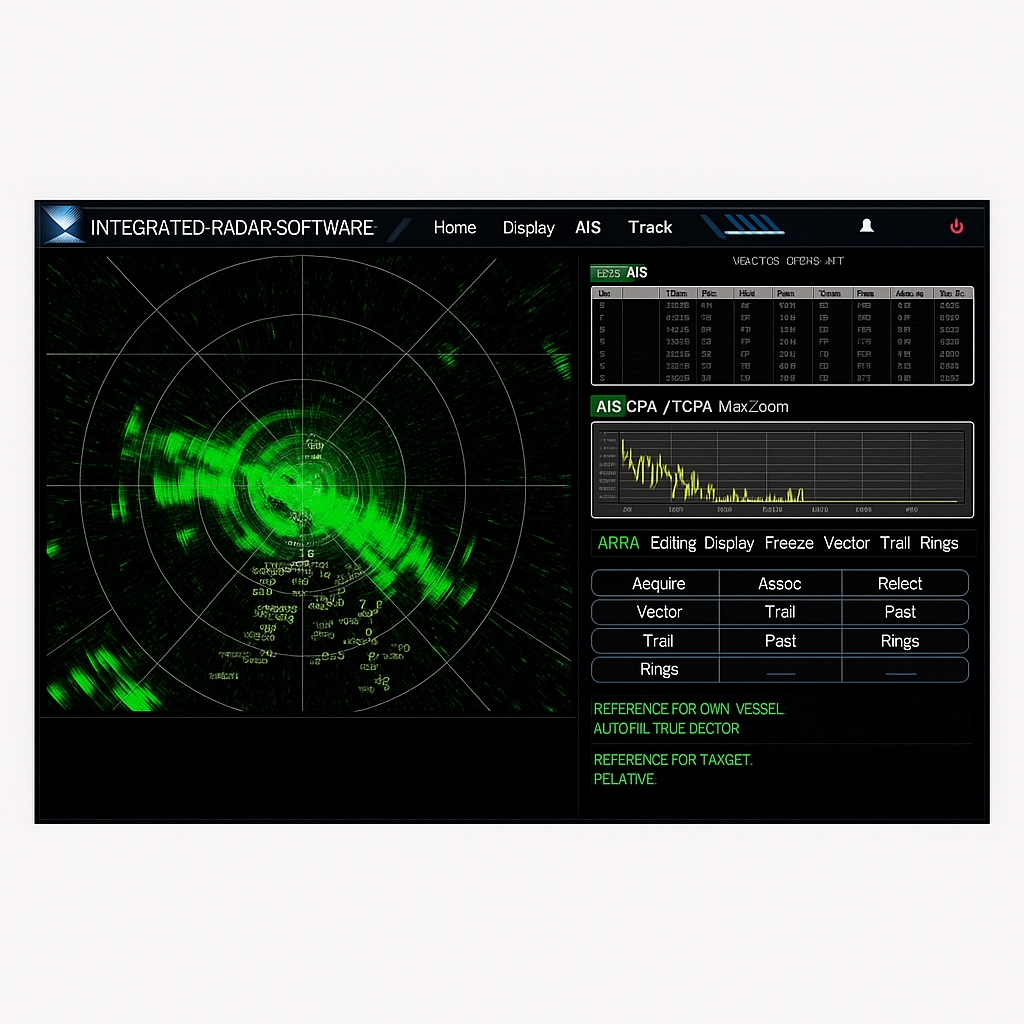

As an example, a YOLOv5 model was trained on 100 samples and deployed on a radar installed at a coastal test range. The resulting automatic detection and tracking outputs are shown in the figures.
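
The article does not say how the 100 samples were annotated. YOLOv5 expects one text label file per image, with one row per object in the form `class x_center y_center width height`, all normalized to [0, 1] by the image size. Assuming targets are marked as pixel boxes on B-scan images, a hypothetical conversion helper (`to_yolo_label` is an invented name) could look like this:

```python
def to_yolo_label(cls_id, box, img_w, img_h):
    """Convert a pixel box (x1, y1, x2, y2) to a YOLO label row.

    Returns 'class x_center y_center width height', normalized to [0, 1].
    """
    x1, y1, x2, y2 = box
    # Clip to the image so normalized values stay within [0, 1].
    x1, x2 = max(0.0, min(x1, img_w)), max(0.0, min(x2, img_w))
    y1, y2 = max(0.0, min(y1, img_h)), max(0.0, min(y2, img_h))
    xc = (x1 + x2) / 2 / img_w
    yc = (y1 + y2) / 2 / img_h
    w = (x2 - x1) / img_w
    h = (y2 - y1) / img_h
    return f"{cls_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"


if __name__ == "__main__":
    # Hypothetical: class 0 = vessel, box marked on a 640 x 480 B-scan image.
    print(to_yolo_label(0, (100, 50, 200, 150), 640, 480))
```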

5. Conclusion

The field results indicate that deep learning-based radar target detection is feasible. Compared with traditional methods, it can significantly improve detection probability in clutter regions, reduce the false alarm rate, and raise overall detection accuracy, which makes it a promising direction for further research.
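
Detection probability and false alarm rate are easiest to compare when both are measured the same way for the traditional and deep learning detectors. The sketch below shows one simple, assumed scoring scheme, not the one used in the trial above. A detection within ±`gate` cells of an unmatched true target counts as a hit, and every other detection counts as a false alarm. P_d is hits divided by true targets, and the false alarm rate is false alarms divided by the number of resolution cells tested. `detection_metrics` is a hypothetical name.

```python
def detection_metrics(detections, truths, cells_tested, gate=1):
    """Score one set of detections against ground truth (an assumed scheme).

    detections, truths: lists of (range_cell, azimuth_cell) tuples.
    Returns (pd, far): detection probability and false alarm rate per cell.
    """
    matched = set()
    false_alarms = 0
    for dr, da in detections:
        hit = next(
            (j for j, (tr, ta) in enumerate(truths)
             if j not in matched and abs(dr - tr) <= gate and abs(da - ta) <= gate),
            None,
        )
        if hit is None:
            false_alarms += 1  # includes duplicate reports of an already-matched target
        else:
            matched.add(hit)
    pd = len(matched) / len(truths) if truths else 0.0
    far = false_alarms / cells_tested
    return pd, far


if __name__ == "__main__":
    dets = [(10, 5), (40, 40)]
    truth = [(10, 6), (20, 20)]
    print(detection_metrics(dets, truth, cells_tested=1000))  # (0.5, 0.001)
```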

ALLPCB
