Overview

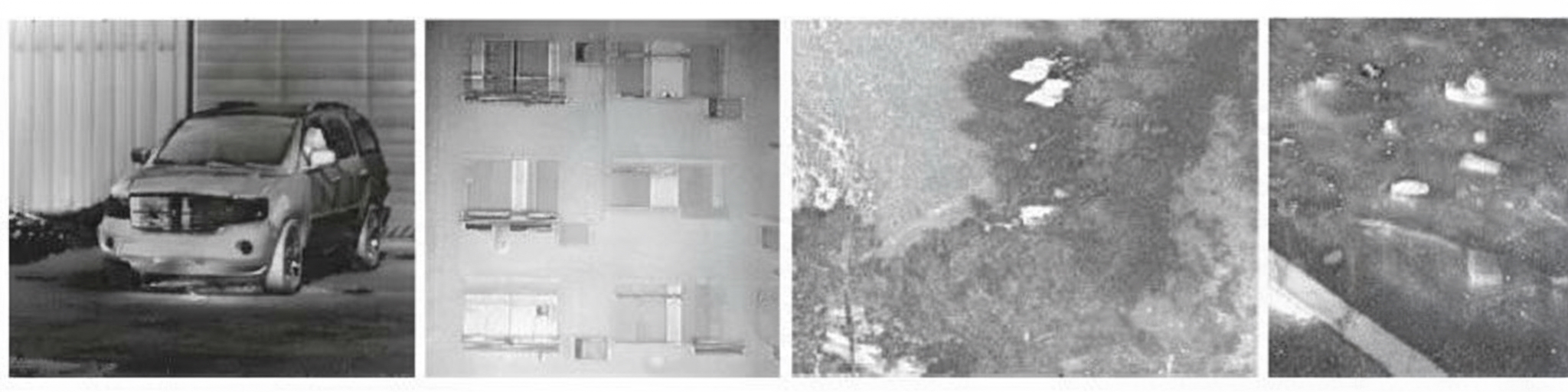

Polarization image fusion aims to improve overall image quality by combining spectral information and polarization information. It has applications in image enhancement, remote sensing, target recognition, and defense.

A research team from the School of Electronic Information Engineering at Changchun University of Science and Technology published an article in the journal Infrared Technology titled "Deep Learning Polarization Image Fusion: Research Status". The first and corresponding author is Professor Duan Jin.

This article reviews traditional fusion methods based on multi-scale transform, sparse representation, and pseudocolor, and focuses on the current state of deep learning methods for polarization image fusion. It first summarizes progress based on convolutional neural networks and generative adversarial networks, then presents related applications in object detection, semantic segmentation, image dehazing, and 3D reconstruction, compiles publicly available high-quality polarization image datasets, and concludes with prospects for future research.

Traditional Polarization Fusion Methods

Multi-scale transform

Multi-scale transform (MST) based methods for polarization image fusion were developed early and are widely used. In 2016, a method was proposed to fuse infrared polarization and intensity images, preserving all features of the infrared intensity image and most features of the polarization image. In 2017, a multi-algorithm collaborative fusion approach combined discrete wavelet transform (DWT), non-subsampled contourlet transform (NSCT), and an improved principal component analysis (PCA), leveraging the complementary relationships among the three algorithms to retain important targets and texture details from the source images. In 2020, a method based on wavelet and contourlet transforms addressed insufficient information retention, interference of polarization information with visual observation, and poor texture detail. The same year, a wavelet-based fusion method tailored to mid-wave infrared polarization characteristics selected different fusion rules for high- and low-frequency components to obtain high-resolution fused images. In 2022, an underwater polarization image fusion algorithm produced fused images with prominent details and higher clarity. In 2023, a multi-scale structural decomposition method decomposed infrared and polarization images into average intensity, signal intensity, and signal structure, and applied a different fusion strategy to each component; experiments showed better texture preservation, improved contrast, and artifact suppression.
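
The idea of applying different fusion rules to low- and high-frequency components can be illustrated with a minimal sketch. It assumes a hand-written single-level Haar transform, averaging for the low-frequency band, and an absolute-maximum rule for the detail bands. These choices and the function names (`haar_dwt2`, `haar_idwt2`, `fuse_haar`) are illustrative assumptions, not the rules used by any of the methods cited above.

```python
import numpy as np


def haar_dwt2(img):
    """Single-level 2D Haar transform of an image with even height and width.

    Returns the low-frequency band and a tuple of three detail bands.
    """
    a = img[0::2, 0::2]
    b = img[0::2, 1::2]
    c = img[1::2, 0::2]
    d = img[1::2, 1::2]
    low = (a + b + c + d) / 2.0
    d1 = (a - b + c - d) / 2.0  # differences between adjacent columns
    d2 = (a + b - c - d) / 2.0  # differences between adjacent rows
    d3 = (a - b - c + d) / 2.0  # diagonal differences
    return low, (d1, d2, d3)


def haar_idwt2(low, details):
    """Exact inverse of haar_dwt2."""
    d1, d2, d3 = details
    out = np.empty((low.shape[0] * 2, low.shape[1] * 2), dtype=float)
    out[0::2, 0::2] = (low + d1 + d2 + d3) / 2.0
    out[0::2, 1::2] = (low - d1 + d2 - d3) / 2.0
    out[1::2, 0::2] = (low + d1 - d2 - d3) / 2.0
    out[1::2, 1::2] = (low - d1 - d2 + d3) / 2.0
    return out


def fuse_haar(intensity, polarization):
    """Fuse two registered grayscale images of equal, even-sized shape."""
    low_a, det_a = haar_dwt2(np.asarray(intensity, dtype=float))
    low_b, det_b = haar_dwt2(np.asarray(polarization, dtype=float))
    # Low-frequency rule (assumed): plain average keeps overall brightness.
    low_f = 0.5 * (low_a + low_b)
    # High-frequency rule (assumed): keep the coefficient with larger magnitude.
    det_f = tuple(
        np.where(np.abs(x) >= np.abs(y), x, y) for x, y in zip(det_a, det_b)
    )
    return haar_idwt2(low_f, det_f)
```

Practical MST methods typically use several decomposition levels and richer transforms such as NSCT; the single Haar level here only keeps the example short.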

Sparse representation

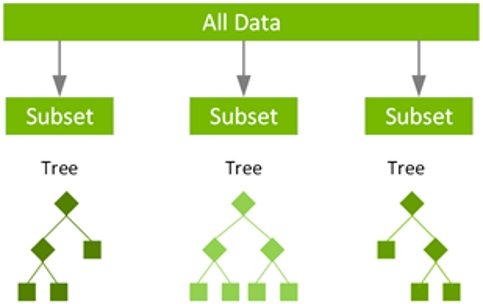

Sparse representation (SR) based fusion methods exploit image sparsity by decomposing images into a basis matrix and a sparse coefficient matrix, then reconstructing the fused image from the fused coefficients so that polarization and spatial information are fully integrated. In 2015, a general fusion framework combining MST and SR was proposed and validated on multi-focus, visible-infrared, and medical image fusion tasks, with comparisons to six multi-scale analysis methods. In 2017, Tianjin University proposed a fusion method combining dual-tree complex wavelet transform (DTCWT) and SR, using an absolute-maximum strategy for high-frequency fusion and using sparse coefficient location information to handle common and unique low-frequency features, yielding fused images with high contrast and detail. In 2021, an infrared polarization fusion method combining bivariate two-dimensional empirical mode decomposition and SR fused high-frequency components with an absolute-maximum strategy to preserve detail, extracted common and new low-frequency features via SR, and combined them using specific fusion rules, achieving advantages in both visual quality and quantitative metrics, as shown in Figure 1.

Shallow neural networks

Shallow neural network based polarization fusion methods mainly use pulse-coupled neural networks (PCNN), which transmit and process information via pulse-coded signals, and are often combined with multi-scale transforms. In 2013, an improved PCNN model for polarization image fusion used polarization parameter images to generate fused images with target details and applied a matching degree M as the fusion rule, producing high-detail fused images. In 2018, a fusion method combining bivariate empirical mode decomposition (BEMD) and adaptive PCNN first fused linear degree of polarization and polarization angle to obtain polarization feature maps, then decomposed and fused them with intensity images; high- and low-frequency components were fused using locally adaptive energy and regional variance strategies, yielding advantages across several evaluation metrics. In 2020, an underwater polarization fusion method combining non-subsampled shearlet transform and a parameter-adaptive simplified PCNN detected more underwater target details and salient features, improving both subjective and objective evaluations, as shown in Figure 2.
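
As a rough illustration of how pulse firing can drive a fusion rule, the following sketch implements a heavily simplified PCNN. Each pixel's stimulus feeds a neuron, firing neighbors raise its internal activity through a linking term, and a decaying threshold jumps after each firing. The coefficient whose neuron fires more often is kept. All parameter values and the function names are illustrative assumptions and do not reproduce the improved or parameter-adaptive PCNN models cited above.

```python
import numpy as np


def neighbor_sum(y):
    """Sum over the 8 neighbors of every pixel, with zero padding at borders."""
    h, w = y.shape
    padded = np.pad(y, 1)
    total = np.zeros_like(y, dtype=float)
    for di in (0, 1, 2):
        for dj in (0, 1, 2):
            if di == 1 and dj == 1:
                continue  # skip the center pixel itself
            total += padded[di:di + h, dj:dj + w]
    return total


def pcnn_fire_counts(stimulus, iterations=30, beta=0.2,
                     alpha_theta=0.2, v_theta=20.0):
    """Run a simplified PCNN and return how often each neuron fired."""
    s = np.asarray(stimulus, dtype=float)
    fired = np.zeros_like(s)    # pulse output of the previous iteration
    theta = np.ones_like(s)     # dynamic threshold
    counts = np.zeros_like(s)
    for _ in range(iterations):
        link = neighbor_sum(fired)          # linking input from neighbors
        activity = s * (1.0 + beta * link)  # internal activity
        fired = (activity > theta).astype(float)
        # Threshold decays exponentially and jumps where a neuron fired.
        theta = np.exp(-alpha_theta) * theta + v_theta * fired
        counts += fired
    return counts


def fuse_by_firing(coef_a, coef_b, **pcnn_kwargs):
    """Pick, per pixel, the coefficient whose neuron fired more often."""
    fire_a = pcnn_fire_counts(np.abs(coef_a), **pcnn_kwargs)
    fire_b = pcnn_fire_counts(np.abs(coef_b), **pcnn_kwargs)
    return np.where(fire_a >= fire_b, coef_a, coef_b)
```

In the combined schemes described above, a rule of this kind would be applied to subband coefficients from a multi-scale transform rather than to raw pixels.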

Pseudocolor

Pseudocolor fusion converts thermal radiation or other target information into color representations aligned with human visual perception, enhancing the visual effect of the image. In 2006, airborne polarization images at 665 nm were colorized to distinguish land, sea, and buildings. In 2007, an adaptive weighted multi-band polarization fusion method based on pseudocolor mapping and linear degree of polarization (DoLP) entropy combined Stokes and DoLP images across bands to suppress background clutter. The same year, a method integrating pseudocolor mapping and wavelet transform was applied to pan-sharpening and spectral fusion tasks, enhancing target-background contrast while preserving target information. In 2010, a fusion method combining nonnegative matrix factorization and the IHS color model improved color expression and target detail enhancement. Subsequent methods used color transfer and clustering segmentation to produce perceptually consistent fused images that improve target contrast. In 2012, a pseudocolor fusion method for infrared polarization and infrared intensity images demonstrated clear advantages across multiple metrics. In recent years, researchers at Beijing Institute of Technology have made significant contributions in this area.
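
One common visualization convention, shown here only as a sketch, maps angle of polarization to hue, degree of linear polarization to saturation, and intensity to brightness. HSV is used as a stand-in for IHS-style color models, and the function names are hypothetical; the methods cited above use their own mappings.

```python
import numpy as np


def hsv_to_rgb(h, s, v):
    """Vectorized HSV to RGB conversion; h, s, v are arrays in [0, 1]."""
    sector = np.floor(h * 6.0)
    f = h * 6.0 - sector
    i = sector.astype(int) % 6
    p = v * (1.0 - s)
    q = v * (1.0 - f * s)
    t = v * (1.0 - (1.0 - f) * s)
    r = np.choose(i, [v, q, p, p, t, v])
    g = np.choose(i, [t, v, v, q, p, p])
    b = np.choose(i, [p, p, t, v, v, q])
    return np.stack([r, g, b], axis=-1)


def polarization_pseudocolor(s0, dolp, aop, eps=1e-8):
    """Map (intensity, DoLP, AoP in radians) to an RGB image in [0, 1]."""
    hue = (aop % np.pi) / np.pi              # AoP is defined modulo pi
    sat = np.clip(dolp, 0.0, 1.0)            # strongly polarized = vivid
    val = s0 / max(float(np.max(s0)), eps)   # normalize brightness
    return hsv_to_rgb(hue, sat, val)
```

With this mapping, unpolarized regions render as gray and strongly polarized regions take a color determined by their polarization angle.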

Comparison of traditional methods

The four categories above each have strengths and weaknesses; in practical applications, these methods are often combined according to the scenario to design fusion algorithms.

Deep Learning Polarization Fusion Methods

Convolutional neural network based methods

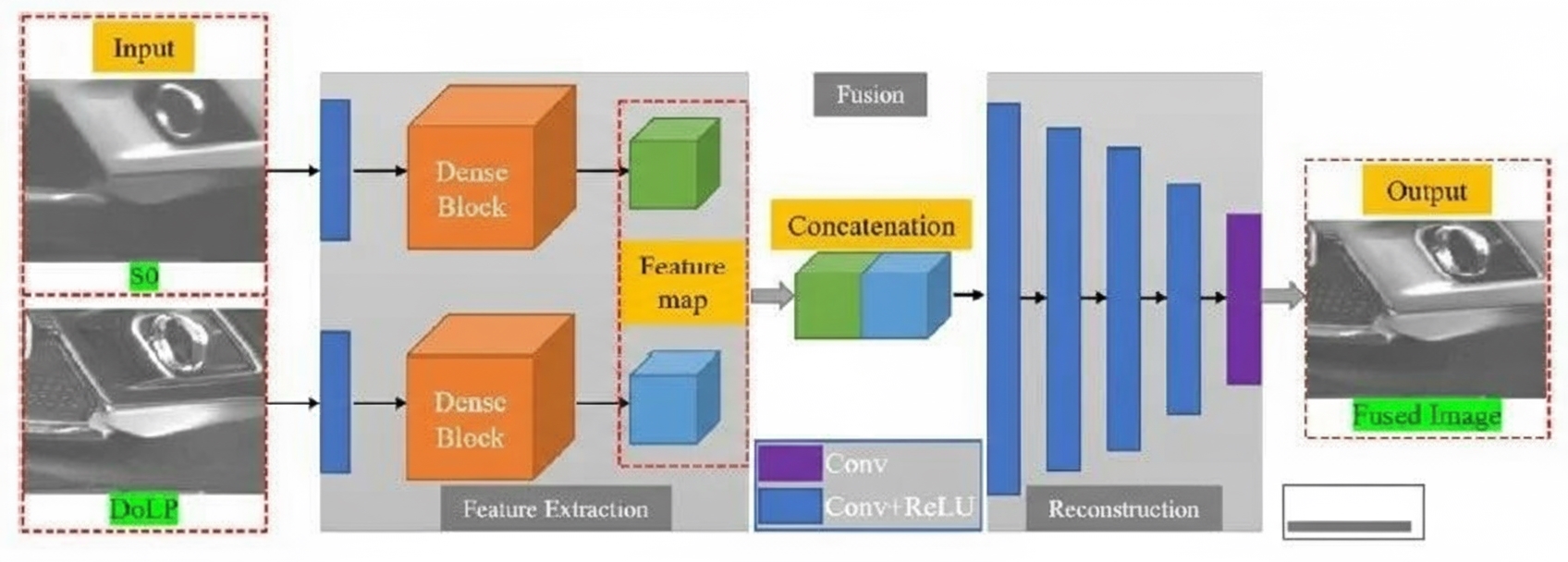

CNN-based methods have shown strong performance in polarization image fusion. In 2017, a two-stream CNN was proposed for hyperspectral and SAR fusion, demonstrating an ability to balance and integrate complementary information from source images. In 2020, Central South University proposed an unsupervised deep network (PFNet) for single-band visible polarization fusion. PFNet includes feature extraction, fusion, and reconstruction modules and employs dense blocks and a multi-scale weighted structural similarity loss to improve performance. PFNet learns an end-to-end mapping to fully fuse intensity and degree-of-polarization images without requiring ground-truth fused images, avoiding complex manual fusion rules.

In 2021, a fusion algorithm combining NSCT and CNN used fast guided filtering and PCNN to denoise polarization angle images, fused them with linear degree of polarization to obtain polarization feature maps, and then generated fused images via multi-scale transform. That year, Central South University also improved PFNet with a self-learning deep convolutional network. Compared with PFNet, the main modification introduced a fusion subnetwork replacing simple connections. The fusion subnetwork adopted a residual-inspired design with three convolutional layers and ReLU activations, as shown in Figure 4, and used a modified cosine similarity loss instead of mean absolute error to better measure differences between fused features and encoded features. Models trained on polarization image datasets also demonstrated effectiveness on other modality fusion tasks.
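
To see why a cosine-based loss behaves differently from mean absolute error, consider the plain (unmodified) cosine distance below. It is a generic sketch, not the authors' modified loss, and the function names are hypothetical. Cosine distance compares the direction of two feature vectors and ignores overall scale, while mean absolute error penalizes any difference in magnitude.

```python
import numpy as np


def mae_loss(a, b):
    """Mean absolute error between two feature arrays of the same shape."""
    return float(np.mean(np.abs(a - b)))


def cosine_distance_loss(a, b, eps=1e-8):
    """1 - cosine similarity between two flattened feature arrays."""
    a = np.ravel(a)
    b = np.ravel(b)
    cos = np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b) + eps)
    return float(1.0 - cos)


features = np.array([1.0, 2.0, 3.0])
scaled = 2.0 * features  # same direction, twice the magnitude
print(mae_loss(features, scaled))              # large: magnitudes differ
print(cosine_distance_loss(features, scaled))  # near zero: same direction
```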

In 2022, an unsupervised pixel-guided fusion network with an attention mechanism defined the information to be fused as highly polarized target information and texture information from intensity images. A specially designed loss constrained the pixel distributions of different images to better preserve salient information at the pixel level, and an attention module addressed inconsistencies between polarization and texture distributions. Experiments showed richer polarization information and improved luminance in fused results. In 2023, a method fused intensity and degree-of-polarization images using an encoder to extract semantic and texture features, an additive strategy and residual network to combine features, and an improved loss function to guide training.

Generative adversarial network based methods

Generative adversarial networks, introduced in 2014 by Goodfellow et al., consist of a generator and a discriminator. The generator models the data distribution while the discriminator evaluates whether samples are real or generated. When the two reach equilibrium, the generator produces samples resembling the real distribution. In 2019, formulating visible-infrared fusion as an adversarial game between generator and discriminator led to FusionGAN, which significantly enhanced texture details and opened new research directions for image fusion. GAN-based fusion methods then developed rapidly; to balance information differences between source images, some approaches adopted dual-discriminator architectures to estimate two source distributions and improve fusion quality.
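
The generator-discriminator game described above is usually written as the minimax objective from Goodfellow et al. (2014), where $G$ is the generator, $D$ the discriminator, $p_{\text{data}}$ the real data distribution, and $p_z$ the noise prior:

```latex
\min_{G}\,\max_{D}\; V(D, G)
  = \mathbb{E}_{x \sim p_{\text{data}}(x)}\bigl[\log D(x)\bigr]
  + \mathbb{E}_{z \sim p_{z}(z)}\bigl[\log\bigl(1 - D(G(z))\bigr)\bigr]
```

In fusion settings the generator typically takes the source images as input instead of noise alone, and individual methods may replace this objective with other adversarial losses, so the formula should be read as the generic starting point rather than the exact loss of any method discussed here.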

In 2019, a GAN that learned and classified source image features achieved better visual quality and overall classification accuracy compared with other algorithms. In 2022, a generative adversarial fusion network was proposed to learn the relationship between polarization information and object radiance, showing the feasibility of introducing polarization into deep-learning-based image restoration; the method effectively removed backscatter and aided radiance recovery. The same year, Changchun University of Science and Technology proposed a semantic-guided dual-discriminator polarization fusion network. The network comprised one generator and two discriminators; the two-stream generator extracted features and combined semantic-object-weighted fusion to produce fused images, while the dual discriminators evaluated semantic targets in degree-of-polarization and intensity images. The authors also designed a polarization information quantity discrimination module to guide fusion by weighting and selectively preserving polarization information from different materials. Experiments indicated improved visual quality, quantitative metrics, and benefits for higher-level vision tasks.

Comparison of deep learning methods

CNN- and GAN-based polarization fusion algorithms have different characteristics; detailed comparisons are available in relevant literature tables.

Applications of Polarization Fusion

Combining spectral and polarization information better reflects material properties, enhances target detail, and improves visual quality, helping to increase accuracy and robustness for downstream vision tasks. The following summarizes applications in object detection, semantic segmentation, image dehazing, and 3D reconstruction.

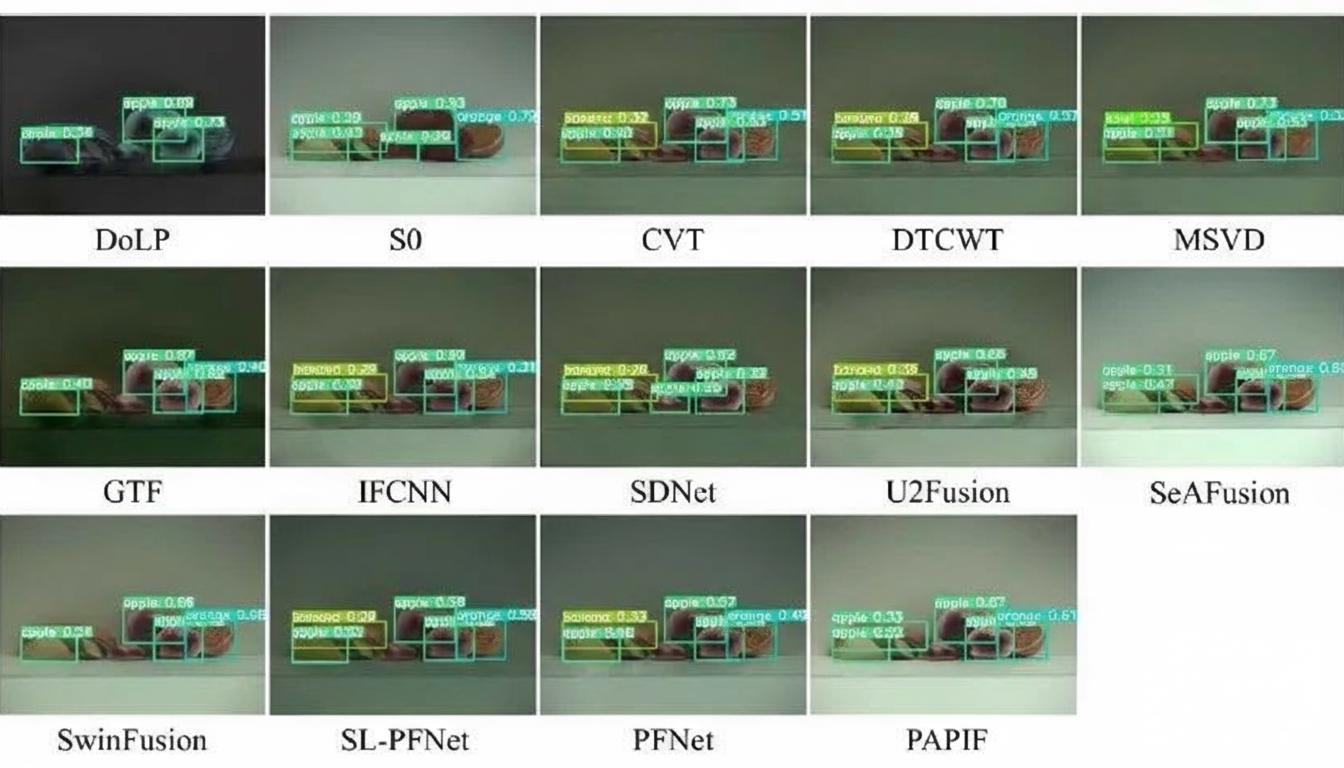

Object detection

Polarization fusion can improve detection rates. In 2020, a fusion method using a polarization feature extractor and consecutive small convolutions in a deep CNN significantly improved detection accuracy, showing lower detection error than traditional methods. In 2022, YOLO v5s was used to detect targets on source images and compared with 11 other methods, demonstrating performance improvements; results are shown in Figure 5.

Semantic segmentation

Polarization fusion can enhance semantic segmentation performance. In 2019, a multi-stage complex-modality network fused RGB and polarization images through a late-fusion framework to improve segmentation. In 2021, an attention fusion network that adapts to various sensor combinations significantly increased segmentation accuracy. In 2022, experiments using DeepLabv3+ on polarization intensity, linear degree of polarization, and fused images showed that fused images improved segmentation accuracy by 7.8% over intensity images.

Image dehazing

In dehazing research, polarization information helps recover transmission and depth, improving dehazing results. In 2018, a multi-wavelet fusion polarization-based dehazing method fused high- and low-frequency coefficients using different rules to highlight target contours and details; experiments in smoky environments showed advantages in visual and objective evaluations, improving target recognition in haze. In 2021, a spatial-frequency-based polarization dehazing method addressed long-range dense haze with good results. The same year, a multi-scale singular value decomposition based polarization fusion dehazing algorithm demonstrated adaptability and robustness, effectively mitigating halos and overexposure.
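
How polarization helps recover transmission can be sketched with the classical polarization-difference haze model, which is swapped in here for illustration and is not the algorithm of the methods cited above. It assumes that the airlight is partially polarized with a known degree `p_air`, that the scene radiance itself is unpolarized, and that the airlight at infinite distance `a_inf` has been estimated (for example from a sky region). The function name and parameters are hypothetical.

```python
import numpy as np


def polarization_dehaze(i_max, i_min, p_air, a_inf, t_min=0.1):
    """Recover scene radiance from two polarizer orientations.

    i_max, i_min : images at the polarizer angles where haze appears
                   strongest and weakest, respectively.
    p_air        : degree of polarization of the airlight (assumed known).
    a_inf        : airlight radiance at infinite distance (assumed known).
    t_min        : lower bound on transmission to avoid noise blow-up.
    """
    total = i_max + i_min
    # Only the airlight is polarized, so the difference image isolates it.
    airlight = np.clip((i_max - i_min) / p_air, 0.0, a_inf)
    # Airlight grows as transmission drops: A = a_inf * (1 - t).
    transmission = np.clip(1.0 - airlight / a_inf, t_min, 1.0)
    radiance = (total - airlight) / transmission
    return radiance, transmission
```

Because transmission decays with distance under a homogeneous haze assumption, the recovered transmission map also carries relative depth information.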

3D reconstruction

The polarization dimension provides texture and shape cues that improve 3D reconstruction and normal estimation. In 2020, combining polarization shape modeling with deep learning by embedding physical models into network architectures achieved the lowest test errors across conditions. In 2021, deep networks addressed angular ambiguity in polarization normal computation, fusing optimized polarization normals with image features to estimate surface normals with high accuracy. In 2022, a low-texture 3D reconstruction algorithm fused polarization and light-field information to resolve azimuth ambiguity by increasing image information dimensionality.
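
The azimuth ambiguity mentioned above arises because the angle of polarization is only defined modulo π, so each pixel admits two candidate surface normals. The sketch below assumes diffuse-dominant reflection (azimuth equal to the angle of polarization, up to π) and a coarse prior normal from some other cue, such as depth from light-field data. The function names are hypothetical, and the selection rule is a simple illustration, not the cited algorithms.

```python
import numpy as np


def candidate_normals(aop, zenith):
    """Return the two unit normals consistent with one AoP and zenith angle."""
    n1 = np.stack(
        [np.sin(zenith) * np.cos(aop),
         np.sin(zenith) * np.sin(aop),
         np.cos(zenith)],
        axis=-1,
    )
    # Azimuth shifted by pi flips the x and y components only.
    n2 = n1 * np.array([-1.0, -1.0, 1.0])
    return n1, n2


def disambiguate(aop, zenith, coarse_normal):
    """Pick, per pixel, the candidate closer to a coarse prior normal."""
    n1, n2 = candidate_normals(aop, zenith)
    d1 = np.sum(n1 * coarse_normal, axis=-1, keepdims=True)
    d2 = np.sum(n2 * coarse_normal, axis=-1, keepdims=True)
    return np.where(d1 >= d2, n1, n2)
```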

Polarization Image Datasets

Deep learning fusion networks depend on high-quality datasets, and dataset size and quality strongly influence training. Public high-quality polarization datasets remain limited. To support research, several China-based and international research teams have released datasets.

In 2019, a dataset of 120 image groups was captured, each group containing intensity images at polarization angles 0°, 45°, 90°, and 135°. In 2020, Tokyo Institute of Technology released a dataset of 40 scenes of full-color polarization images, each group containing four RGB images at different polarization angles. The same year, a long-wave infrared polarization dataset of 2,113 annotated images captured with a self-developed uncooled infrared DoFP camera was released, covering daytime and nighttime urban roads and highways.
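
Datasets that provide intensity images at 0°, 45°, 90°, and 135° allow the linear Stokes parameters, the degree of linear polarization (DoLP), and the angle of polarization (AoP) to be computed per pixel. The following is a minimal sketch of that standard computation, assuming four registered floating-point images of the same shape; the function name is hypothetical.

```python
import numpy as np


def stokes_from_four_angles(i0, i45, i90, i135, eps=1e-8):
    """Compute S0, S1, S2, DoLP, and AoP from four polarizer-angle images."""
    s0 = 0.5 * (i0 + i45 + i90 + i135)  # total intensity
    s1 = i0 - i90                       # horizontal vs. vertical component
    s2 = i45 - i135                     # +45 vs. -45 degree component
    # eps guards against division by zero in dark pixels.
    dolp = np.clip(np.sqrt(s1 ** 2 + s2 ** 2) / (s0 + eps), 0.0, 1.0)
    aop = 0.5 * np.arctan2(s2, s1)      # radians, in (-pi/2, pi/2]
    return s0, s1, s2, dolp, aop
```

The resulting `s0` and `dolp` arrays correspond to the intensity and degree-of-polarization images that the fusion networks discussed earlier take as input.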

In 2021, Zhejiang University released a campus road dataset of 394 pixel-aligned annotated color-polarization images, including RGB, disparity, and label images; Central South University captured a dataset of 66 polarization image groups containing buildings, vegetation, and vehicles; King Abdullah University of Science and Technology released a polarization dataset of 40 color scenes, each with four intensity images at different polarization angles. Brief descriptions of these datasets are available in the literature.

Summary and Outlook

Polarization image fusion has attracted growing attention. Fusing images from different spectral bands and modalities with polarization information lets each source compensate for the weaknesses of the others. Deep learning fusion methods mitigate some limitations of traditional approaches and show advantages in subjective and objective evaluations, and they have become a primary research direction. However, current deep learning polarization fusion methods are relatively few and often use existing networks directly or with modest modification, with limited analysis based on polarization imaging principles and material polarization properties. There is room for advancement in theory, application, and experimental validation. Future research directions include:

1) Constructing polarization bidirectional reflectance distribution function (pBRDF) models that exploit polarization differences between target and background to analyze interactions between polarized imaging and transmission media, and building end-to-end polarization-transmission-detection optical imaging models to improve polarization feature extraction and fusion.

2) Deepening the study of target polarization characteristics and their representations to fully exploit deep learning, mining nonphysical and deep polarization features, and integrating polarization optical properties into fusion, combining traditional and deep-learning fusion for improved feature-level polarization fusion.

3) Promoting information sharing of polarization multispectral datasets and developing deep fusion networks capable of few-shot training, since deep learning typically requires large datasets while public high-quality polarization datasets are limited.

4) Exploring new multi-source fusion strategies that combine complementary information from intensity, spectral, and polarization data to improve fusion performance and support downstream tasks such as segmentation, classification, and detection.

ALLPCB
