Overview

Many DSP systems are real-time, so designers must optimize at least one performance metric, and often several. Optimizing all metrics simultaneously is difficult and usually impossible. For example, making an application run faster may increase memory usage, and reducing memory may reduce speed. Designers must balance these trade-offs according to system goals.

Choosing Which Metrics to Optimize

Which metric or set of metrics is most important depends on the developer's objectives. Optimizing for throughput or latency can allow a slower or lower-cost DSP to handle the same workload. In embedded products, such cost savings can determine whether a product is viable. Developers may instead optimize to make room for additional features. Extra features are valuable when they improve overall system performance or allow the system to scale, for example by adding channels to a base station.

Reducing the memory footprint lowers overall system cost because less memory is required. Power optimization allows longer operation on the same battery capacity, which is important for battery-powered applications. It also reduces the power-supply and cooling requirements of the system.

Understanding Trade-offs

A difficult part of optimizing DSP applications is understanding the trade-offs among performance metrics. For example, optimizing for execution speed often increases memory use, while it can also reduce power per operation. Memory optimizations can reduce power by lowering the number of memory accesses, but they may also reduce code performance. Before attempting any optimization, understand the system objectives and measure the trade-offs.

Make the Common Case Fast

A fundamental rule in computer and DSP system design is to make the common case fast and to support frequent operations efficiently. This is essentially Amdahl's law: the performance gain from accelerating a particular execution mode is limited by the fraction of time that mode is used. Do not spend effort optimizing code that rarely runs. A small optimization in a loop that executes thousands of times will have much greater impact than optimizing an infrequently used path.

Sum-of-Products and DSP Hardware Support

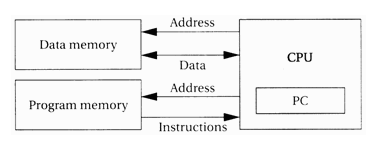

DSP algorithms are often dominated by multiply-and-add iterations. This pattern is commonly called sum-of-products (SOP). DSP processor designers provide hardware features to execute SOP efficiently, such as single-cycle multiply-accumulate (MAC) instructions, architectures that allow multiple memory accesses per cycle (Harvard architecture), and special loop-control hardware that handles loop counts with low overhead.

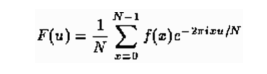

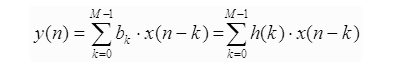

1. DSP algorithms consist of repeated multiplications and additions, as seen in the discrete Fourier transform (DFT) formula. Filter algorithms follow a similar iterative multiply-and-add pattern.

2. Harvard architecture. Separating program and data memory lets the processor fetch an instruction and access data in the same cycle, which improves performance for DSP applications.
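For reference, the standard DFT and direct-form FIR filter equations both have the sum-of-products structure described above, where x[n] is the input, N is the DFT length, h[k] are the M filter coefficients, and y[n] is the filter output:

$$
X[k] = \sum_{n=0}^{N-1} x[n]\, e^{-j 2\pi k n / N}, \qquad k = 0, 1, \dots, N-1
$$

$$
y[n] = \sum_{k=0}^{M-1} h[k]\, x[n-k]
$$

Each DFT output needs N complex multiply-accumulates, so computing all N outputs directly takes N^2 of them, and each FIR output needs M multiply-accumulates. This is why MAC throughput dominates the run time of such algorithms.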

ALLPCB
