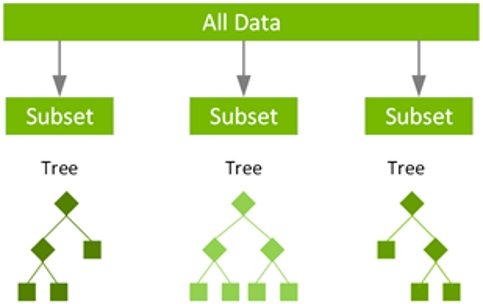

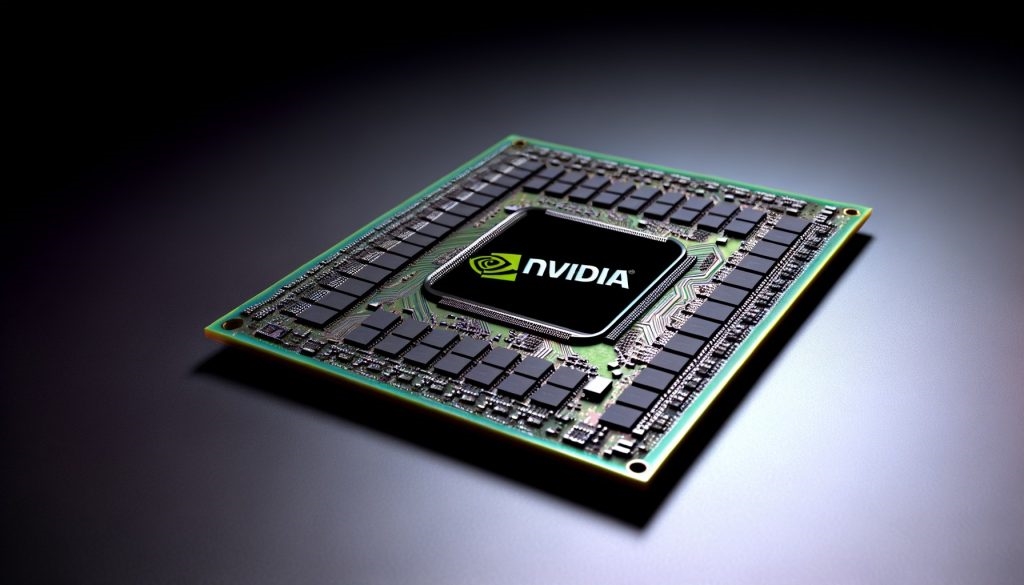

Random Forest: What It Is and How It Works

Technical overview of random forest algorithms, bagging, bias-variance tradeoffs, and GPU-accelerated implementations (RAPIDS) for faster model training.
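
As a rough illustration of bagging, the sketch below trains each tree on a bootstrap sample (rows drawn with replacement) using a random subset of features, then predicts by majority vote. Averaging many decorrelated trees is what lowers variance relative to a single tree. This is a minimal sketch under simplifying assumptions: real random forests grow full decision trees, while this one uses depth-1 "stumps" for brevity. The names `fit_stump`, `fit_forest` and `max_features` are hypothetical illustrations. This is not RAPIDS (cuML) or scikit-learn code.

```python
import random
from collections import Counter

def fit_stump(X, y, features):
    """Fit a depth-1 tree (a 'stump'): the single threshold split, over the
    given features, that misclassifies the fewest training rows."""
    best = None
    for f in features:
        for t in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            if not left or not right:
                continue
            left_lab = Counter(left).most_common(1)[0][0]
            right_lab = Counter(right).most_common(1)[0][0]
            errors = sum(l != left_lab for l in left) + sum(r != right_lab for r in right)
            if best is None or errors < best[0]:
                best = (errors, f, t, left_lab, right_lab)
    if best is None:  # no valid split: fall back to the majority class
        majority = Counter(y).most_common(1)[0][0]
        return lambda row: majority
    _, f, t, left_lab, right_lab = best
    return lambda row: left_lab if row[f] <= t else right_lab

def fit_forest(X, y, n_trees=25, max_features=1, seed=0):
    rng = random.Random(seed)
    n, d = len(X), len(X[0])
    forest = []
    for _ in range(n_trees):
        # Bagging: a bootstrap sample of n rows drawn with replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        Xb = [X[i] for i in idx]
        yb = [y[i] for i in idx]
        # Feature subsampling: each tree sees only a random subset of features.
        feats = rng.sample(range(d), max_features)
        forest.append(fit_stump(Xb, yb, feats))
    return forest

def predict(forest, row):
    # Majority vote across all trees.
    return Counter(tree(row) for tree in forest).most_common(1)[0][0]

X = [[0, 0], [1, 0], [2, 1], [3, 1], [10, 5], [11, 5], [12, 6], [13, 6]]
y = [0, 0, 0, 0, 1, 1, 1, 1]
forest = fit_forest(X, y, n_trees=25, max_features=1, seed=42)
```

Because each tree sees different rows and features, individual trees make partly independent errors, and the vote smooths them out.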

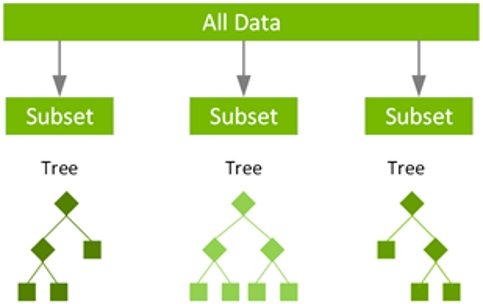

Survey of techniques for small object detection and face detection: image pyramids, FPNs, data augmentation, anchor strategies, SNIP/SNIPER training and context modeling.

Technical analysis of Nvidia's GPU roadmap: annual cadence with H200/B100/X100, One Architecture, SuperChip design and NVLink interconnect evolution.

AI super-resolution and upscaling: GPU and transfer-learning advances, training-data limits, and applications in satellite, medical, gaming, and video-conferencing.

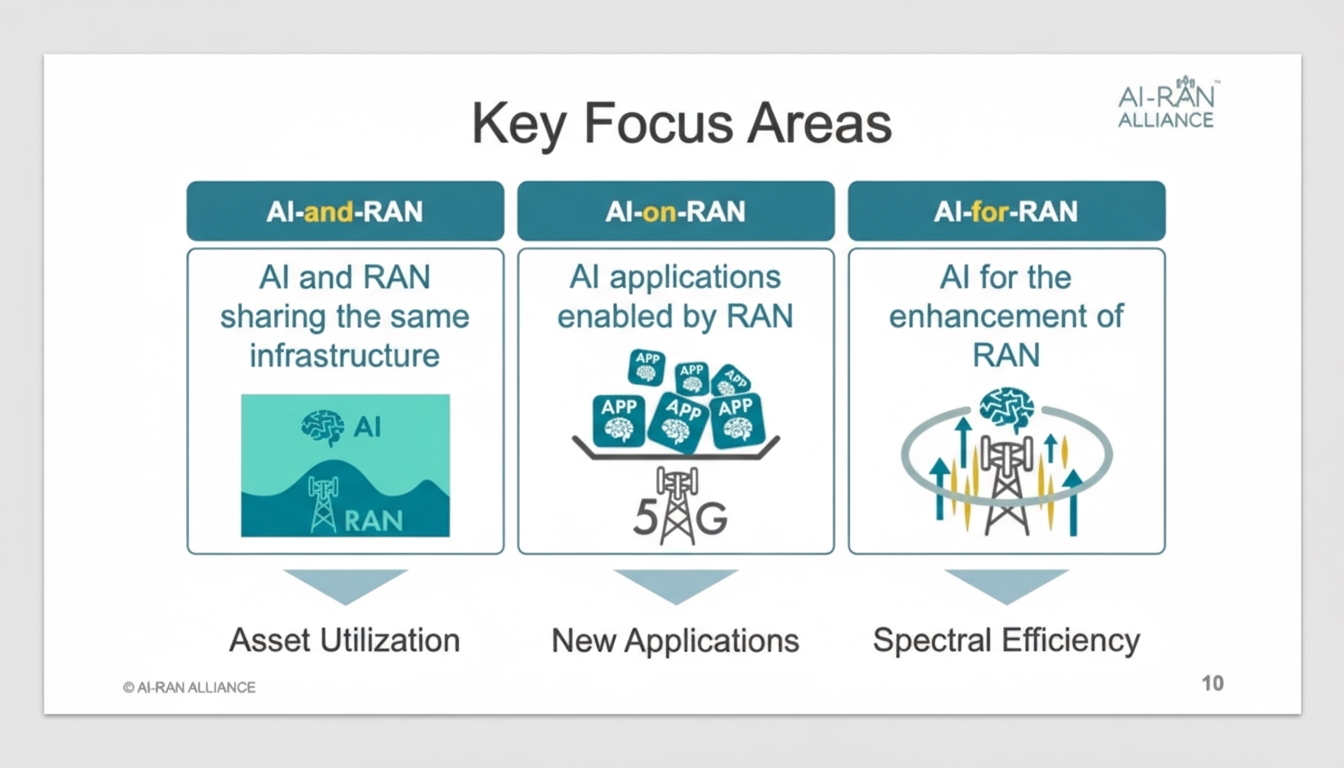

Overview of the AI-RAN Alliance formed at MWC 2024, its goals to integrate AI into radio access networks for 5G/6G, edge AI deployment, and contrast with OpenRAN.

Comprehensive review of polarization image fusion and deep learning methods (CNN, GAN), traditional algorithms, datasets, applications, and future research directions.

Overview of Synopsys VSO.ai integration into VCS and its AI-driven verification methods to accelerate coverage convergence, infer coverage, and reduce redundant regressions.

Overview of AI memory demands and new technologies: capacity, bandwidth, latency, power, reliability, and adoption challenges for future AI systems.

Analysis of large-model scaling: how parameter count and training tokens drive compute requirements, showing that compute grows roughly quadratically with model size when training tokens are scaled in proportion to parameters.
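
The quadratic claim follows from a widely used rule of thumb: training a dense transformer costs about 6 FLOPs per parameter per token, so C ≈ 6·N·D. If the token budget D is scaled in proportion to the parameter count N (D = k·N), then C ≈ 6·k·N². The sketch below assumes that approximation and a ratio of 20 tokens per parameter. Both the ratio and the function names are illustrative assumptions, not figures taken from the article.

```python
def training_flops(n_params, n_tokens):
    """Common rule of thumb: about 6 FLOPs per parameter per training token
    (roughly 2 for the forward pass and 4 for the backward pass)."""
    return 6.0 * n_params * n_tokens

def flops_with_proportional_data(n_params, tokens_per_param=20.0):
    """If the token budget scales with model size (D = k * N), then
    C = 6 * N * (k * N) = 6 * k * N**2, i.e. quadratic in N."""
    return training_flops(n_params, tokens_per_param * n_params)

for n in (1e9, 2e9, 4e9):
    c = flops_with_proportional_data(n)
    print(f"N = {n:.0e} params -> C = {c:.2e} FLOPs")
```

Each doubling of N doubles D as well, so compute grows fourfold per doubling rather than twofold.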

Microsoft’s government CTO details public sector AI adoption, cloud migration, ethical AI governance, and cybersecurity practices to enable secure, data-driven government services.

Detailed review of a 30k-line NumPy machine learning repository implementing 30+ models with explicit gradient computations, utilities, and test examples.

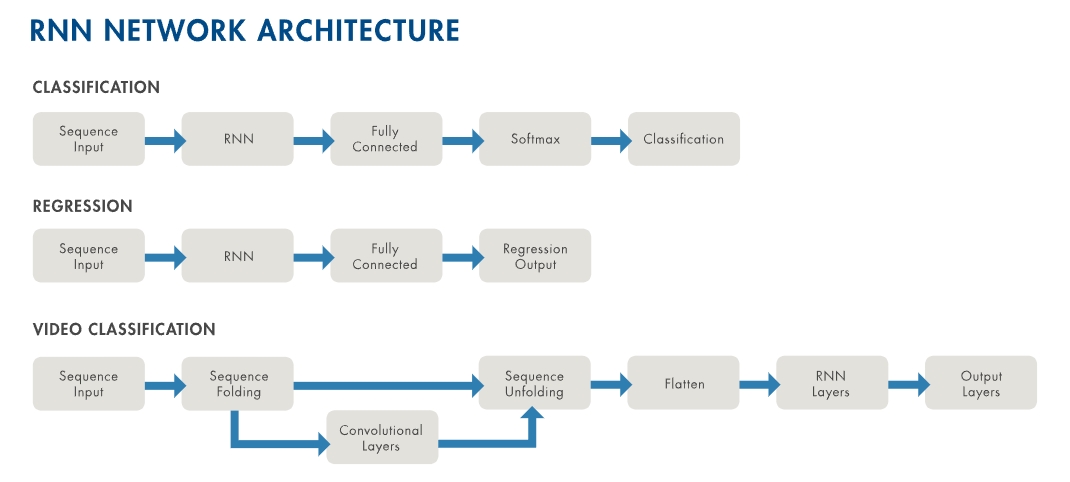

Technical overview of RNNs and LSTM architectures, how they model sequential data, application areas like signal and text processing, and MATLAB-based implementation.
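
To show how an LSTM carries state across a sequence, here is a sketch of one LSTM cell step with scalar input and hidden size. The forget gate decides how much old cell memory to keep, and the input gate decides how much of the new candidate value to add. The parameter names (`w_*`, `u_*`, `b_*`) and values are hypothetical. This is a plain Python sketch, not the article's MATLAB implementation.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell (input and hidden size 1).
    p holds hypothetical weights: w_* (input), u_* (recurrent), b_* (bias)."""
    i = sigmoid(p["w_i"] * x + p["u_i"] * h_prev + p["b_i"])    # input gate
    f = sigmoid(p["w_f"] * x + p["u_f"] * h_prev + p["b_f"])    # forget gate
    o = sigmoid(p["w_o"] * x + p["u_o"] * h_prev + p["b_o"])    # output gate
    g = math.tanh(p["w_g"] * x + p["u_g"] * h_prev + p["b_g"])  # candidate value
    c = f * c_prev + i * g  # keep part of the old memory, add part of the new
    h = o * math.tanh(c)    # hidden state exposed to the next step / layer
    return h, c

def run_lstm(sequence, p):
    h, c = 0.0, 0.0  # initial hidden and cell state
    for x in sequence:
        h, c = lstm_step(x, h, c, p)
    return h, c

params = {name: 0.5 for name in (
    "w_i", "u_i", "b_i", "w_f", "u_f", "b_f",
    "w_o", "u_o", "b_o", "w_g", "u_g", "b_g")}
h, c = run_lstm([0.1, -0.4, 0.8], params)
```

The additive update of `c` is the design choice that lets gradients flow across many steps more easily than in a plain RNN.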

Review of neural network quantization and numeric formats, covering floating-point vs. integer formats, block floating point, logarithmic number systems, and inference vs. training trade-offs.
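
As a concrete example of an integer format, the sketch below applies symmetric per-tensor int8 quantization. A single float scale maps the largest magnitude to 127, every value is rounded to the nearest integer code, and dequantization multiplies back by the scale. This is one common scheme chosen for illustration. The function names are hypothetical, and the article may cover other variants.

```python
def quantize_int8(values):
    """Symmetric per-tensor int8 quantization: one float scale shared by all
    values, zero maps exactly to 0, codes clipped to [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale

def dequantize(codes, scale):
    return [c * scale for c in codes]

weights = [0.42, -1.27, 0.0, 0.9, -0.013]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
# Rounding error per value is at most half a quantization step (scale / 2).
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Block floating point sits between the two extremes: instead of one scale per tensor, each small block of values shares an exponent, which limits how much a single outlier degrades the precision of everything else.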

Survey of deep learning approaches for radar target detection, comparing two-stage and single-stage detectors (Faster R-CNN, YOLOv5), preprocessing, and deployment results.

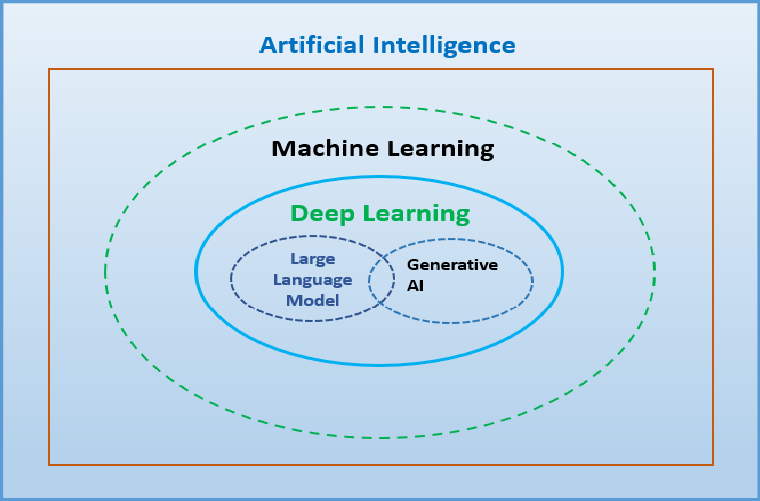

Overview of artificial intelligence and its relationship to machine learning and deep learning, covering AI categories, the ML workflow, and common deep learning architectures.

Overview of ASR (speech-to-text): pipeline, acoustic and language models, CTC training, decoding strategies, and GPU acceleration using NVIDIA NeMo and related toolkits.
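
The simplest CTC decoding strategy is greedy (best-path) decoding. It takes the most likely token at each audio frame, collapses consecutive repeats, and then removes blank tokens. A blank between two identical symbols is what lets CTC emit a genuine double letter. The sketch below assumes the blank sits at index 0, a convention that varies between toolkits. It is not NeMo's decoder API. Beam search with a language model is the usual, more accurate alternative.

```python
def ctc_greedy_decode(frame_probs, vocab, blank=0):
    """Greedy (best-path) CTC decoding.
    frame_probs: one list of per-token probabilities per audio frame.
    vocab: index -> symbol; vocab[blank] is the CTC blank token."""
    # 1. Pick the most likely token at every frame.
    best_path = [max(range(len(p)), key=p.__getitem__) for p in frame_probs]
    # 2. Collapse consecutive repeats, then 3. drop blanks.
    symbols = []
    prev = None
    for tok in best_path:
        if tok != prev and tok != blank:
            symbols.append(vocab[tok])
        prev = tok
    return "".join(symbols)

vocab = ["_", "c", "a", "t"]  # index 0 is the blank (an assumption)
path = [1, 1, 0, 2, 2, 0, 3]  # frame-level labels: c c _ a a _ t
frames = [[0.9 if i == t else 0.1 / 3 for i in range(len(vocab))] for t in path]
text = ctc_greedy_decode(frames, vocab)
```

Here the seven frames decode to "cat". A path such as `l l _ l` would decode to "ll" because the blank separates the two runs.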

Technical overview of AI cybersecurity risks: automated attacks, deepfakes, adversarial examples, privacy and data security, ethics, legal challenges, and system fragility.

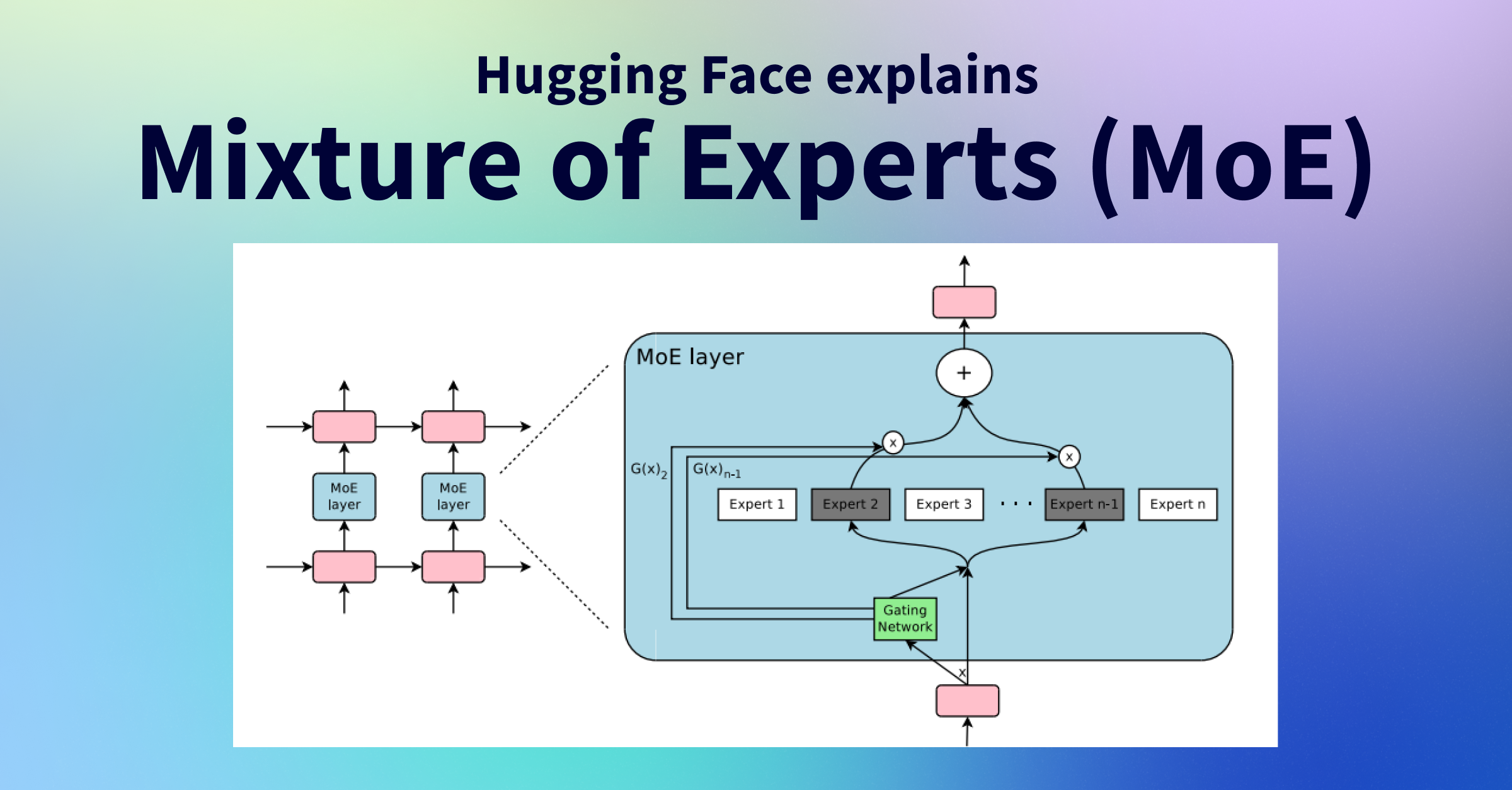

Overview of Mixture-of-Experts (MoE) transformers: sparse routing with gating networks and experts, training and inference trade-offs, and Mistral AI's recent Mixtral 8x7B model.
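
Sparse routing can be sketched for a single token. A router scores every expert, only the top-k experts are evaluated, and their outputs are combined using a softmax over the selected scores. Mixtral 8x7B, for instance, is described as sending each token to 2 of its 8 experts in this way. The linear router, the toy experts and the function names below are hypothetical illustrations, not Mistral's implementation.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, gate_weights, experts, k=2):
    """Sparse MoE layer for one token.
    x: input vector; gate_weights: one router weight vector per expert;
    experts: callables mapping a vector to a vector of the same size."""
    logits = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in gate_weights]
    # Keep only the top-k experts; the rest are never evaluated (sparsity).
    top = sorted(range(len(experts)), key=lambda e: logits[e], reverse=True)[:k]
    weights = softmax([logits[e] for e in top])
    out = [0.0] * len(x)
    for w, e in zip(weights, top):
        y = experts[e](x)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, top

# Usage: 4 toy experts, route one token to the top-2.
experts = [
    lambda v: [2 * a for a in v],
    lambda v: [a + 1 for a in v],
    lambda v: [0.0 for _ in v],
    lambda v: [-a for a in v],
]
router = [[3.0, 0.0], [1.0, 0.0], [0.0, 0.0], [-1.0, 0.0]]
output, chosen = moe_forward([1.0, 0.0], router, experts, k=2)
```

Per-token compute scales with k rather than with the total number of experts, which is the main inference trade-off: all expert parameters must still be held in memory.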

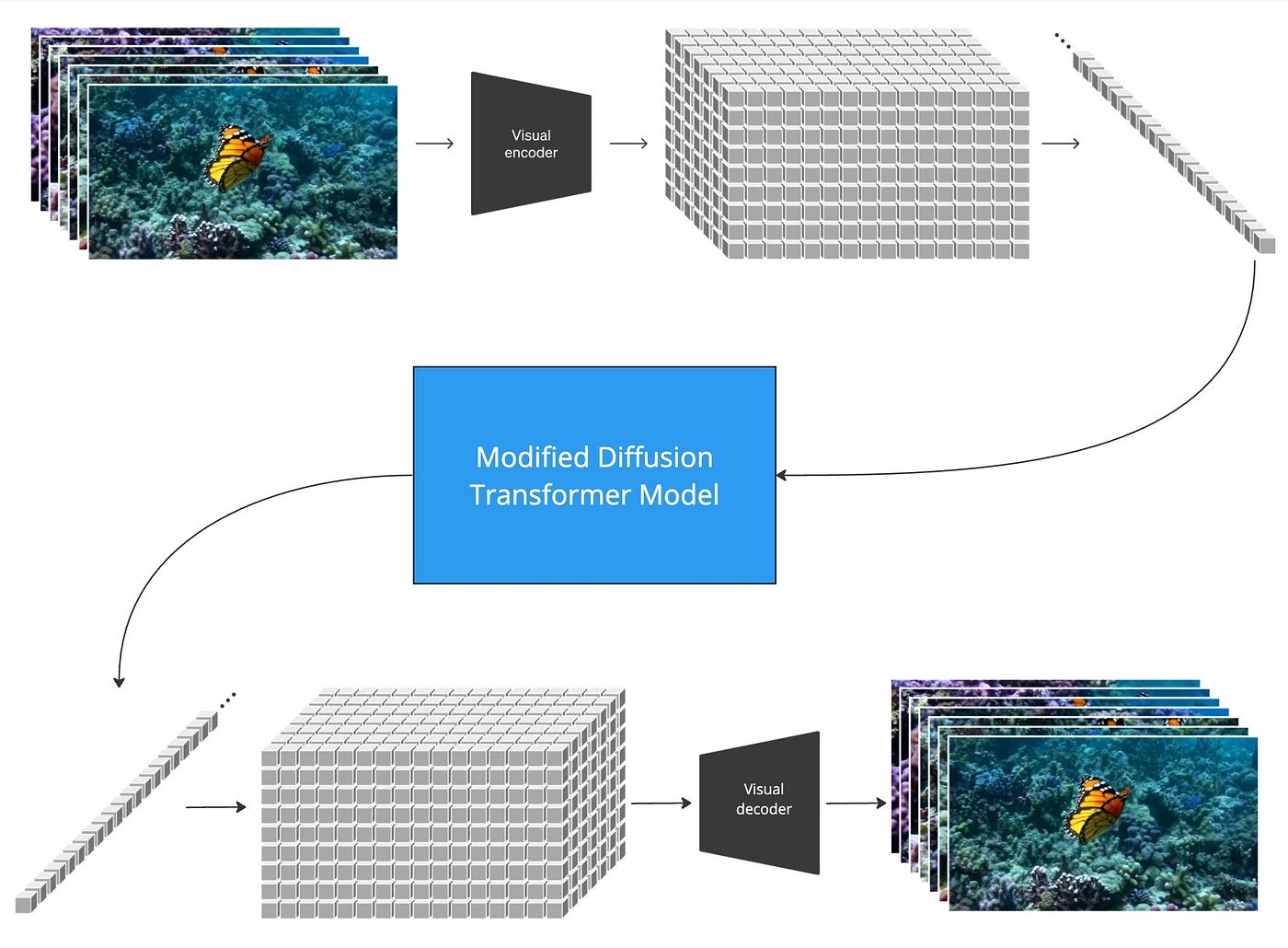

Technical overview of OpenAI's Sora and its video generation capabilities, core machine learning foundations, and potential impacts on production workflows and society.

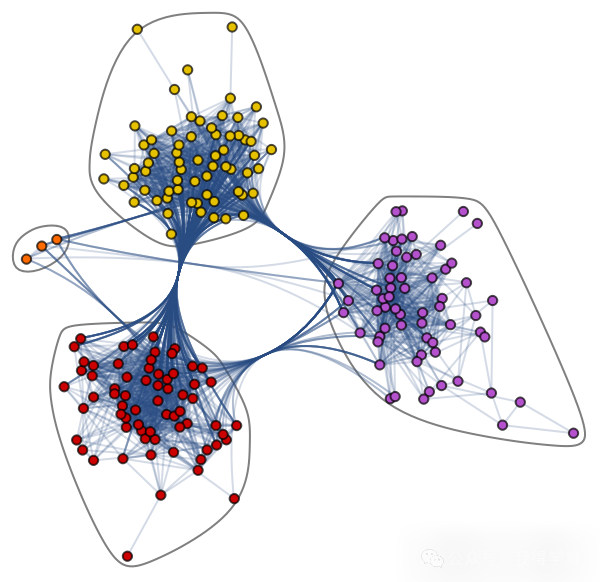

Overview of graph neural networks: graph basics and NetworkX graph creation, GNN types and challenges, plus a PyTorch spectral GNN example for node classification.
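
A widely used spectral-style layer is the graph convolutional network (GCN) propagation rule, H' = ReLU(Â·H·W) with Â = D^(-1/2)(A + I)D^(-1/2), which is a first-order approximation of spectral graph convolution. The sketch below implements one such layer in plain Python on a 3-node path graph. It is not the article's PyTorch code, it includes no training loop, and the weight matrix is a hypothetical placeholder.

```python
import math

def normalized_adjacency(n, edges):
    """A_hat = D^(-1/2) (A + I) D^(-1/2) for an undirected graph with n nodes."""
    a = [[0.0] * n for _ in range(n)]
    for u, v in edges:
        a[u][v] = a[v][u] = 1.0
    for i in range(n):
        a[i][i] = 1.0  # self-loops so each node keeps its own features
    deg = [sum(row) for row in a]
    return [[a[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]

def matmul(x, y):
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]

def gcn_layer(a_hat, h, w):
    """One GCN layer: aggregate neighbor features, project with W, apply ReLU."""
    z = matmul(matmul(a_hat, h), w)
    return [[max(0.0, v) for v in row] for row in z]

a_hat = normalized_adjacency(3, [(0, 1), (1, 2)])  # path graph 0-1-2
features = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # one-hot features
weights = [[1.0], [1.0], [1.0]]  # hypothetical 3x1 weight matrix
hidden = gcn_layer(a_hat, features, weights)
```

Stacking two such layers and ending with a softmax over class scores is the usual setup for node classification. The symmetric normalization keeps high-degree nodes from dominating the aggregated features.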